Scalability vs. Performance

A quick note on the difference between scalability and performance

The terms scalability and performance are sometimes used interchangeably, but they actually mean two entirely different things.

Let’s make sure we understand the difference.

Performance is about how well your application runs for one user. Your application could have only one user, and performance might still be an issue.

How fast do pages load for that one user? Do assets take a while to appear on the page? How long does the user have to wait for a response when they are adding content to your database? Fetching content from the database?

Are there bottlenecks you must address to make that user’s experience smoother and your application faster?
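To answer questions like these, you first need a number. Here is a minimal sketch of timing one operation for a single user. The names `measure_latency` and `fake_page_load` are made up for illustration, and the 10 ms sleep is a stand-in for a real page load or database call.

```python
import statistics
import time


def measure_latency(operation, repeats=20):
    """Return the median wall-clock time, in seconds, of calling `operation`."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    # The median is less sensitive to a single slow outlier than the mean.
    return statistics.median(samples)


def fake_page_load():
    # Hypothetical stand-in for real work (a page request, a database write).
    time.sleep(0.01)


if __name__ == "__main__":
    latency_ms = measure_latency(fake_page_load) * 1000
    print(f"median latency: {latency_ms:.1f} ms")
```

Swap `fake_page_load` for a function that performs the request you actually care about, and you have a baseline to compare against after each optimization.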

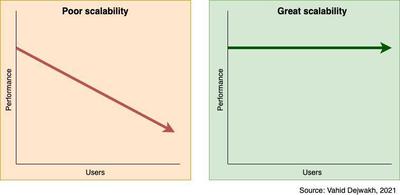

Scalability, on the other hand, is about how that same performance changes as your application gains more and more users. Typically, performance goes down, but by how much, and how quickly? Does performance go down linearly, for example by 5% for every one thousand new users? That would be poor scalability. Down 2% for every one million users? Better, but still poor.

An application that scales well maintains constant performance no matter how many users are using it. In other words, performance does not depend on the number of users: whether you have one user or one million, your application responds just as quickly.
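To make those degradation rates concrete, here is a rough sketch that models "performance goes down by X%" as response time growing by X% of its single-user baseline for every block of users. That reading is an assumption, as are the 200 ms baseline and the function name `response_time_ms`.

```python
def response_time_ms(baseline_ms, users, pct_per_step, step_users):
    """Linear model: response time grows by `pct_per_step` percent of the
    baseline for every `step_users` users. A rate of 0 means perfect scaling."""
    steps = users / step_users
    return baseline_ms * (1 + (pct_per_step / 100) * steps)


if __name__ == "__main__":
    baseline = 200  # assumed single-user response time, in milliseconds
    users = 20_000

    # 5% slower per 1,000 users
    print(response_time_ms(baseline, users, 5, 1_000))
    # 2% slower per 1,000,000 users
    print(response_time_ms(baseline, users, 2, 1_000_000))
    # constant performance, regardless of user count
    print(response_time_ms(baseline, users, 0, 1_000))
```

Under this model, the 5%-per-thousand application doubles its response time by 20,000 users (200 ms × (1 + 0.05 × 20) = 400 ms), while the application that scales well stays at 200 ms.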

To improve performance, you can apply web performance optimization (WPO) techniques, optimize your database queries, add caching, and reduce latency and other network-related overhead, for example by analyzing how long DNS lookups take.
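Caching is the easiest of these to show in a few lines. The sketch below uses Python’s standard `functools.lru_cache` to keep results in memory; `fetch_display_name`, the call counter, and the 50 ms sleep are invented for illustration and stand in for a slow database query.

```python
import functools
import time

# Counts how often the "database" is actually hit (for illustration only).
QUERY_COUNT = {"count": 0}


@functools.lru_cache(maxsize=1024)
def fetch_display_name(user_id):
    """Hypothetical slow lookup; repeated calls with the same id hit the cache."""
    QUERY_COUNT["count"] += 1
    time.sleep(0.05)  # simulated query latency
    return f"user-{user_id}"


if __name__ == "__main__":
    fetch_display_name(42)  # slow: runs the simulated query
    fetch_display_name(42)  # fast: served from the in-memory cache
    print(QUERY_COUNT["count"], fetch_display_name.cache_info())
```

An in-process cache like this only helps a single server process. Once you run several application servers, a shared cache is the more likely choice, but the idea is the same: do the slow work once and reuse the result.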

Improving scalability, on the other hand, is harder and takes more effort. It generally involves vertically scaling your data stores as far as possible (that is, adding RAM, CPU, and disk throughput) and then scaling horizontally: your application servers first, and then your data stores.

Improving scalability may also require converting your monolith into a microservices-based architecture, and using containers such as Docker so you can easily deploy more instances of your application as you need them.

Scaling might also introduce new components to your system as you go from a three-tier infrastructure to an n-tier infrastructure, with load balancers, caches, and CDNs at various points.
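As a toy illustration of what a load balancer does once you have several application servers, here is a sketch of round-robin selection. The class name and backend addresses are hypothetical, and a real load balancer would also handle health checks, retries, and weighting.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation, one per incoming request."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        # cycle() repeats the backend list endlessly, in order.
        self._rotation = itertools.cycle(list(backends))

    def next_backend(self):
        return next(self._rotation)


if __name__ == "__main__":
    # Hypothetical application server addresses.
    balancer = RoundRobinBalancer(["app-1:8000", "app-2:8000", "app-3:8000"])
    for request_id in range(5):
        print(request_id, "->", balancer.next_backend())
```

Adding capacity then becomes a matter of adding another entry to the backend list, which is what makes horizontal scaling of application servers attractive.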